Portfolio, program and project assurance framework

Introduction

Purpose

This Queensland Government Enterprise Architecture (QGEA) framework is mandated by the Portfolio, program and project management and assurance policy, under the Financial and Performance Management Standard (2019). It provides information and defines best practice processes for Queensland Government departments to undertake assurance on all digital and ICT-enabled portfolios, programs and projects.

The framework applies to all Queensland Government employees (permanent, temporary and casual) working in a Queensland Government department and Queensland Government owned corporations including all organisations and individuals acting as its agents (including contractors and consultants).

Scope

Assurance is the objective examination of information for the purpose of providing an independent assessment on governance, risk management and control processes for an organisation.

The Portfolio, program and project assurance framework (‘the framework’) includes all types of project, program and portfolio assurance such as:

- point-in-time assurance activities such as gated reviews, health checks, portfolio assurance and Portfolio, Program and Project Management Maturity Model (P3M3®) assessments

- one-off assurance (such as deep dives)

- continuous assurance (such as project assurance and assurance partnering).

This framework is not a substitute for a rigorous portfolio, program and project governance framework that manages a department’s key processes. These include:

- business and strategic planning

- investment appraisal and business case management (including benefits management under the Financial Accountability Handbook: Volume 1 – Introduction)

- project, program or portfolio management

- risk management

- procurement and acquisition

- service and contract management.

Notes

This framework outlines the minimum assurance requirements for all digital and ICT-enabled portfolios and initiatives in Queensland Government. A department may undertake additional assurance activities if it wishes; these requirements will be outlined in the department’s assurance framework.

Role of the Office of Assurance and Investment

The Queensland Government requires visibility across the government’s investment in digital and ICT-enabled initiatives and assurance that the expected benefits will be delivered on time, to budget and in line with Queensland Government legislation and QGEA policy requirements. The Queensland Government expects initiative risks and issues to be transparent with departments acting on and mitigating problems before there is an impact on community and consumer outcomes and benefits.

The role of the Office of Assurance and Investment (OAI) is to monitor and report on all assurance activities undertaken by digital and ICT-enabled initiatives, on behalf of the Digital Economy Leaders Sub-Group (DELSG).

OAI receives and registers the following documents for profile level 2, 3 and 4 initiatives:

- the initial and subsequent versions of the initiative assurance profile

- the initial and subsequent versions of the initiative assurance plan

- the initiative’s gated assurance reports, health check reports and post-implementation review report.

Assurance principles

This framework adopts a principles-based approach to assurance. Principles are foundational ‘universal truths’ that are:

- self-validating, because they have been proven in practice

- empowering, because they enable practitioners to apply effective and pragmatic assurance to different portfolios, programs and projects.

The five assurance principles are:

Assurance is planned and appropriate

Each initiative has an approved fit-for-purpose assurance plan which:

- aligns to the baselined schedule and critical path

- includes a budget for assurance in the initiative business case or the portfolio annual budget

- is periodically updated to reflect any changes in risk profile or lessons learned

- avoids duplication and overlap with other initiative information

- defines and agrees the roles and responsibilities for assurance, including that of the Accountable Officer, investing department and those organising the assurance.

Assurance drives and informs good decision making

- Assurance provides a timely, reliable assessment to inform key investment decisions.

- Assurance is timed to occur before key decision points such as funding allocation, business case approval and operational go-live.

- Assurance information is clear and uses consistent definitions and standards to support comparisons over time (for example, using the standard delivery confidence ratings and priority ratings for recommendations in gated assurance).

- Accountable Officers and investing departments have full and unimpeded access to the assurance review team and their report and use assurance information to focus support, attention and resources where it is most needed.

Assurance is objective and independent

- The independence of the assurance review team members is determined in accordance with the principles of the Three Lines Model (see Appendix A), where an assurer cannot assure their own work.

- Those undertaking assurance are not involved in the delivery of initiative products, nor do they make initiative management decisions.

- Assurance is provided by suitably qualified reviewers with the right skills and experience to assure the scale and complexity of the investment.

- Conflicts of interest for the review team are identified and managed.

Assurance is supported by senior management and a culture of continuous improvement

- The Accountable Officer is open to external scrutiny and constructive challenge, and this behaviour is expected of their teams.

- The Accountable Officer and key stakeholders actively engage in assurance activities, ensuring any planned reviews remain fit for purpose throughout the initiative lifecycle.

- Assurance is actively promoted as a valuable process in securing the successful delivery of expected benefits.

- Implementation of assurance report recommendations is actively planned and reported.

- The review team is supported by the Accountable Officer to access the people and resources they require.

Assurance is forward-looking and focused on risks to benefits realisation

- Assurance supports the Accountable Officer and investing department to identify and communicate key risks and opportunities likely to impact realisation of expected benefits.

- The assurance activities outlined in this framework are not conducted as audit activities.

- Assurance reviews use available information from a range of sources that reflects the delivery approach, scale and complexity of the initiative.

- Assurance looks for, and considers, information from a range of sources including documents, interviews, reports and visual displays.

Assurance processes for initiatives

The assurance process consists of four activities: assurance profiling, assurance planning, assurance reviews and, lastly, reviewing the action plan implementation and reporting.

1. Assurance profiling

The assurance profiling step is used to determine the minimum assurance requirements and the degree of independence of the review team members. Assurance profiling occurs at the commencement of an initiative. The assurance profile should then be periodically re-assessed when there are significant changes to the initiative such as changes to project scope, complexity, impact and/or risks. The assurance profile is independently re-validated as part of any gated assurance review or health check review.

All Queensland Government digital and ICT-enabled initiatives must undertake assurance profiling to determine their assurance profile level, which ranges from level 1 (the lowest rating) to level 4 (the highest rating).

The assurance profile is used by OAI to determine those initiatives that will be subject to the Queensland Government investment review process.

All assurance profiles are registered with OAI prior to Gate 1 – Preliminary evaluation and again at Gate 3 – Contract award for projects, and prior to the Gate 0 – Initial for a program. Once the profile is noted by OAI, assurance planning for the next project stage or program tranche can commence.

Notes

Each program, and each of its projects, is required to complete the assurance profiling tool. A program can only be a profile level 3 or higher. The assurance level of the program will reflect the highest assurance profile level of its component projects.
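The note above can be read as a simple rule. The following minimal Python sketch assumes that a program takes the highest profile level among its component projects, with a floor of level 3. The function name `program_profile_level` is hypothetical and is not part of the assurance profiling tool.

```python
def program_profile_level(project_levels: list[int]) -> int:
    """Illustrative only: derive a program's profile level from its projects.

    Assumption: the program takes the highest component project level,
    with a floor of level 3 because a program cannot be profiled lower.
    """
    if not project_levels:
        raise ValueError("a program needs at least one component project")
    for level in project_levels:
        if level not in (1, 2, 3, 4):
            raise ValueError(f"profile level must be 1 to 4, got {level}")
    return max(3, max(project_levels))


# Hypothetical programs: one with level 1 and 2 projects, one with a level 4 project.
print(program_profile_level([1, 2]))
print(program_profile_level([2, 4]))
```

In the first call the floor of level 3 applies; in the second the level 4 project sets the program level. The profile produced by the profiling tool and approved by the Accountable Officer remains the authoritative result.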

Assurance profiling tool

Assurance profiling involves answering a series of questions to determine initiative complexity and impact. This activity should be undertaken with the Accountable Officer, board members, and key stakeholders with a good knowledge of the initiative and the technical and business challenges.

Tip

A good assurance profile includes:

- explanatory notes to justify the chosen attribute level to avoid ‘gaming’ the profile level

- coverage of the full scope of the initiative, not just the current stage (for example, only the development of the business case)

- consensus amongst key stakeholders regarding the initiative impact, complexity and risks (threats and opportunities) to inform the appropriate profile level

- approval by the initiative’s Accountable Officer.

Assurance profile levels

Four assurance profile levels are defined. Each progressive assurance level supports an increasing level of assurance activity, scrutiny, and independence of the review team members.

Profile level 1 is applied to less complex initiatives that may, for example, be undertaken by existing departmental resources and funded by capital or operational budgets. A profile level 4 initiative represents highly complex or transformational change initiatives that may, for example, involve several agencies, be the subject of Ministerial interest, deliver on an election commitment and/or require tied funding such as Cabinet Budget Review Committee (CBRC) funding or grants.

Profile level

Level 1 (lowest)

These projects involve ‘known knowns’. Projects take a ‘sense-categorise-respond’ approach to establish the facts (‘sense’), categorise the plausible solutions, then respond by applying project best practice.

In these projects the relationship between the business problem and solution is relatively clear. A similar project may have been undertaken previously that can inform the delivery approach and there is high confidence in estimates for duration and cost.

The timeframe may be relatively short – for example within a financial year. They may be funded either from capital and/or existing operational budgets.

Level 1 projects are not ‘business-as-usual’; they are still a temporary endeavour, and there are perceived advantages to applying project management to govern, direct and report them. As a minimum, they will have a project manager and an Accountable Officer appointed.

Level 2

These projects represent the ‘known unknowns’.

The relationship between business problem and solution requires analysis or expertise from subject matter experts; and there are a range of plausible solutions. There is some degree of solution complexity.

The initiative takes a ‘sense-analyse-respond’ approach to assess the facts, analyse the solutions, and apply tailored project management best practice.

Level 2 projects may have some very specific delivery dates and are typically delivered within one to a few years. Project estimate confidence remains high with a small plus/minus range based on a well-defined scope.

Level 3

These initiatives represent ‘unknown unknowns’.

The relationship between business problem and solution is ambiguous and there may be considerable complexity in the system architecture and business process changes required.

Initiatives probe first, then sense, and then respond to determine a demonstrable value for money solution to the business problem.

The impact and complexity of these initiatives is high.

Level 4 (highest)

These initiatives represent high value and high-risk strategic investments.

The relationship between business problem and solution is unclear.

Initiatives take an ‘act-sense-respond’ approach to determine a demonstrable value for money solution to the business problem.

These initiatives may involve challenging timeframes with firm political, legislative or technical deadlines, and a high level of public interest. Estimate confidence may be low because of a large number of assumptions or unknowns. The initiative may involve a number of departments in delivery and benefit realisation.

2. Assurance planning

Assurance planning is the second activity in the assurance process.

Note

All Queensland Government initiatives must complete and maintain an assurance plan for the life of the initiative including for Gate 5 - Benefits realisation, where applicable. The assurance plan will outline what assurance activities will be undertaken, when they are timed to occur, who will be undertaking the assurance, and what the budget will need to be.

The approved assurance plan, and subsequent versions, must be registered with OAI.

Assurance for an initiative should be planned to reflect:

- the initiative assurance profile level

- the baselined schedule, critical path and timing of key decision points

- the initiative risks (threats and opportunities).

The first version of the assurance plan should be prepared when starting up an initiative, after the approval of the assurance profile. It should be completed in consultation with the Accountable Officer and include a budget for assurance. The assurance plan will be influenced by who is able to undertake the reviews and the number of reviews that are planned in line with the minimum assurance requirements.

The Accountable Officer approves the final draft version of the plan and any subsequent updates. The plan will need to be maintained with current dates and any changes to planned reviews.

Guidance for projects

Profile level

Level 1

Level 1 projects require minimal assurance through health checks.

A Gate 3 assurance review will be required if the project is planning to enter into a new contract or extend an existing contract for the provision of digital or ICT systems and software.

Ongoing project assurance occurs as part of project board roles and their respective business, user and supplier assurance responsibilities to ensure compliance with all required Queensland Government and departmental policy requirements.

Level 2

Level 2 projects undertake gated assurance reviews, plus a health check if it is more than six months between gated assurance reviews.

Level 2 projects may require a Gate 3 review to be repeated, once before pilot implementation and again to confirm the investment decision is appropriate prior to contract execution and/or full funding commitment and implementation.

Level 2 projects may require a Gate 4 review to be repeated if there are multiple significant go-live releases or different site implementations.

The Gate 5 assurance review is undertaken if the project has claimed any financial, economic and/or productivity benefit types.

Ongoing project assurance occurs as part of project board roles and their respective business, user and supplier assurance responsibilities to ensure compliance with all required Queensland Government and departmental policy requirements.

Level 3

Level 3 projects must undertake gated assurance reviews, plus a health check if it is more than six months between gated assurance reviews.

Level 3 projects may require a Gate 3 review to be repeated, once before pilot implementation and again to confirm the investment decision is appropriate prior to contract execution and/or full funding commitment and implementation.

Level 3 projects may require a Gate 4 review to be repeated if there are multiple significant go-live releases or different site implementations.

Ongoing project assurance occurs as part of project board roles and their respective business, user and supplier assurance responsibilities to ensure compliance with all required Queensland Government and departmental policy requirements.

Level 4

Level 4 projects must undertake gated assurance reviews, plus a health check if it is more than six months between gated assurance reviews.

Level 4 projects may require a Gate 3 review to be repeated, once before pilot implementation and again to confirm the investment decision is appropriate prior to contract execution and/or full funding commitment and implementation.

Level 4 projects may require a Gate 4 review to be repeated if there are multiple significant go-live releases or different site implementations.

Ongoing project assurance occurs as part of project and program board roles and their respective business, user and supplier assurance responsibilities to ensure compliance with all required Queensland Government and departmental policy requirements.

Minimum assurance requirements

The minimum assurance requirements for both programs and projects based on the approved profile level are shown in the table below. A health check must be undertaken for profile level 2 or above initiatives if it has been more than six months between gated assurance reviews. Internal program and project assurance undertaken within the initiative representing the business, supplier and user interests will be ongoing as part of program and project board roles.

Note

All projects complete a post-implementation review in the project closure stage to confirm achievement of project deliverables, outcomes, benefits and lessons learned. Other forms of assurance, such as deep dive reviews and assurance partner or continuous/integrated assurance are optional for all initiatives and applied as needed.

Projects

| Review / Service | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|
| Gate 1¹ | | yes | yes | yes |
| Health check - early stage² | yes | | | |
| Gate 2 | | yes | yes | yes |
| Gate 3³ | as required⁴ | yes | yes | yes |
| Gate 4⁵ | | yes | yes | yes |
| Health check - pre-go live | yes | | | |
| Post implementation review⁶ | yes | yes | yes | yes |
| Gate 5 (initial, mid-stage, final) | | optional⁷ | yes | yes |
| General health check (if more than six months between gated reviews) | optional | yes | yes | yes |
| Project assurance (business / user / supplier) | yes | yes | yes | yes |
| Deep dive review(s) | as required | as required | as required | as required |
| Integrated/continuous/assurance partner assurance⁸ | optional | optional | optional | optional |

1. A Gate 1 – Preliminary Evaluation and Gate 2 – Business Case review can be undertaken only once. In addition, Gates 1 and 2 cannot be combined as each serves a specific purpose.

2. The early stage health check is undertaken at the end of the initiation stage to inform the decision to move to the delivery stage. More details on health checks are provided in Appendix B.

3. A Gate 3 – Contract Award can be repeated, once before pilot implementation and again to confirm the investment decision is appropriate prior to full funding allocation and implementation.

4. A Gate 3 – Contract Award will need to be undertaken if the initiative is planning to enter into a new contract or extend an existing contract for the provision of digital or ICT systems and software.

5. A Gate 4 – Readiness for Service review is undertaken once the investment decision is made and can be repeated per site implementation depending on complexity and risk.

6. The post implementation review (PIR) is undertaken in the project closure stage. More details on PIRs are in Appendix B.

7. Gate 5 – Benefits Realisation will apply when there are claimed financial, economic and/or productivity benefit types identified at Gate 3 – Contract Award.

8. More details on the integrated/continuous/assurance partner assurance activity are provided in Appendix B.
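For planning purposes, the projects table can be held as a simple lookup. The sketch below is illustrative Python, not an official tool. The names `PROJECT_REQUIREMENTS` and `reviews_for_level` are hypothetical, and the cell values reflect one reading of the table above, in which gated reviews at Gates 1, 2, 4 and 5 apply from level 2, consistent with the guidance for projects.

```python
from typing import Dict, Optional, Tuple

# One entry per table row; positions 0 to 3 hold the cells for levels 1 to 4.
# None means the review is not listed as a minimum requirement at that level.
Cells = Tuple[Optional[str], Optional[str], Optional[str], Optional[str]]

PROJECT_REQUIREMENTS: Dict[str, Cells] = {
    "Gate 1": (None, "yes", "yes", "yes"),
    "Health check - early stage": ("yes", None, None, None),
    "Gate 2": (None, "yes", "yes", "yes"),
    "Gate 3": ("as required", "yes", "yes", "yes"),
    "Gate 4": (None, "yes", "yes", "yes"),
    "Health check - pre-go live": ("yes", None, None, None),
    "Post implementation review": ("yes", "yes", "yes", "yes"),
    "Gate 5": (None, "optional", "yes", "yes"),
    "General health check": ("optional", "yes", "yes", "yes"),
    "Project assurance": ("yes", "yes", "yes", "yes"),
    "Deep dive review(s)": ("as required",) * 4,
    "Integrated/continuous/assurance partner assurance": ("optional",) * 4,
}


def reviews_for_level(profile_level: int) -> Dict[str, str]:
    """Return the reviews listed for a project profile level and their status."""
    if profile_level not in (1, 2, 3, 4):
        raise ValueError(f"profile level must be 1 to 4, got {profile_level}")
    return {
        review: cells[profile_level - 1]
        for review, cells in PROJECT_REQUIREMENTS.items()
        if cells[profile_level - 1] is not None
    }


for review, status in reviews_for_level(1).items():
    print(f"{review}: {status}")
```

A lookup like this could seed the first draft of an assurance plan; the conditions in the numbered notes still need to be applied by the person preparing the plan.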

Programs

| Review | Level 3 | Level 4 |
|---|---|---|
| Gate 0 - initial (occurs at least once)¹ | yes | yes |
| Gate 0 - mid-stage (multiple)² | yes | yes |
| Gate 0 - final (occurs once) | yes | yes |
| General health check (if more than six months between gated reviews) | yes | yes |
| Program assurance (business/user/supplier) | yes | yes |
| Deep dive review(s) | as required | as required |
| Integrated/continuous/assurance partner assurance | optional | optional |

1. The initial Gate 0 review occurs as the program nears the end of the Identify process and/or the initial Plan Progressive Delivery process using Managing Successful Programmes (MSP® 5th edition).

2. The mid-stage review can be repeated multiple times during the life of the program. This review should be completed at the end of each tranche, incorporating each project within the tranche.
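The six-month health check rule lends itself to a quick check of a draft assurance plan. The following Python sketch is illustrative only. It assumes "six months" is measured in calendar months, and the names `add_months` and `gaps_needing_health_check` are hypothetical.

```python
import calendar
from datetime import date
from typing import List, Tuple


def add_months(start: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the month's last day."""
    index = start.year * 12 + (start.month - 1) + months
    year, month_index = divmod(index, 12)
    month = month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def gaps_needing_health_check(
    profile_level: int, gated_review_dates: List[date]
) -> List[Tuple[date, date]]:
    """Return consecutive gated review pairs that are more than six months apart."""
    if profile_level < 2:
        return []  # the general health check is optional for level 1 projects
    ordered = sorted(gated_review_dates)
    return [
        (earlier, later)
        for earlier, later in zip(ordered, ordered[1:])
        if later > add_months(earlier, 6)
    ]


# Hypothetical planned gated review dates for a level 3 project.
planned = [date(2025, 1, 15), date(2025, 6, 30), date(2026, 2, 1)]
for earlier, later in gaps_needing_health_check(3, planned):
    print(f"Plan a health check between {earlier} and {later}")
```

With these dates, only the gap between the second and third reviews exceeds six months, so a single health check would be flagged for the plan.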

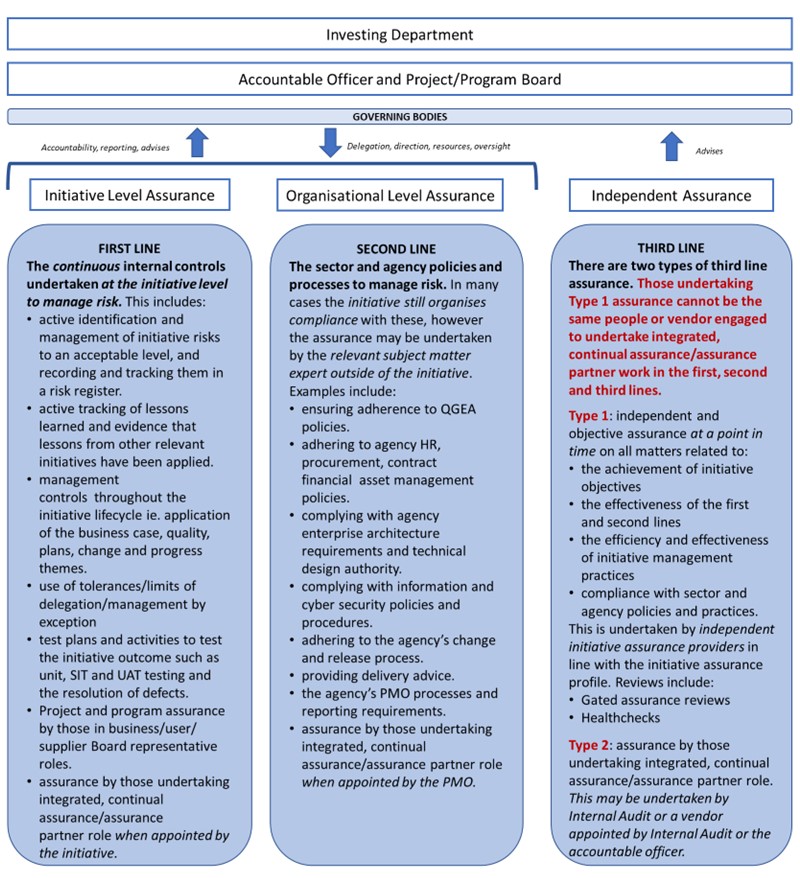

Minimum independence requirements

This framework applies the principles of the Institute of Internal Auditors’ Three Lines Model to determine if the chosen reviewer has the appropriate level of independence for the type of assurance activity. The Three Lines Model distinguishes between:

- continuous controls and assurance undertaken by the initiative team and within the initiative to manage risks (first line)

- assurance undertaken by subject matter experts outside the initiative such as the portfolio management office (PMO) and other departmental teams to manage risks (second line)

- the independent assurance undertaken at points in the initiative lifecycle such as gated assurance and health checks (third line).

The Three Lines Model must be considered when planning the initiative’s assurance as it will guide who can undertake which assurance activities. More details on the Three Lines Model are provided in Appendix A.

Note

At a minimum, the review team leader must be a certified Gateway review team leader and have experience and credentials in the QGEA portfolio, program and project management policy requirements. Review team members must also be certified Gateway review team members.

| Review team | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|
| Internal to department and external to initiative | yes | yes | no | no |
| External to department and within government | yes | yes | no | no |
| External vendor external to government | yes | yes | yes | yes |

Level 1

Projects may be assured by certified Gateway reviewers internal to the department and external to the initiative. They may, for example, be within the department’s portfolio, program or project management office; however, they should not be involved in any way with delivering, or advising on the delivery of, the initiative. An external assurance provider may be used where required, for example, due to capacity or capability constraints.

Level 2

Projects can be assured by certified Gateway reviewers internal to the department and independent of the initiative. However, some level 2 projects can be quite large in scope and have considerable complexity. When planning the assurance for these projects, consider the experience and credentials of the internal assurance reviewers. Once again, the review team may comprise reviewers from the department’s portfolio, program or project management office; however, they should not be involved in any way with delivering, or advising on the delivery of, the initiative. The Cross Agency Assurance Working Group is able to undertake assurance reviews for level 2 projects (subject to availability). An external assurance provider may be used where required.

Level 3 and 4

Profile level 3 and 4 initiatives require greater independence and scrutiny by the review team members. This requirement applies to gated assurance reviews, health checks and post implementation reviews. Profile level 4 initiatives also require a larger review team with four review members to allow for the necessary subject matter expertise on the review team (also see Appendix C for details).

These initiatives should engage an appropriate external vendor for their assurance reviews by using the Standing Offer Arrangement.
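The independence table above can be expressed as a small lookup when checking a proposed review team. This Python sketch is illustrative only; the names `ReviewerSource`, `PERMITTED_SOURCES` and `source_is_permitted` are hypothetical, and the sketch does not cover reviewer certification or team size.

```python
from enum import Enum


class ReviewerSource(Enum):
    INTERNAL_TO_DEPARTMENT = "internal to department and external to initiative"
    WITHIN_GOVERNMENT = "external to department and within government"
    EXTERNAL_VENDOR = "external vendor external to government"


# Permitted review team sources per profile level, as read from the table.
PERMITTED_SOURCES = {
    1: set(ReviewerSource),
    2: set(ReviewerSource),
    3: {ReviewerSource.EXTERNAL_VENDOR},
    4: {ReviewerSource.EXTERNAL_VENDOR},
}


def source_is_permitted(profile_level: int, source: ReviewerSource) -> bool:
    """Check whether a review team source meets the minimum independence level."""
    if profile_level not in PERMITTED_SOURCES:
        raise ValueError(f"profile level must be 1 to 4, got {profile_level}")
    return source in PERMITTED_SOURCES[profile_level]


print(source_is_permitted(2, ReviewerSource.INTERNAL_TO_DEPARTMENT))
print(source_is_permitted(3, ReviewerSource.WITHIN_GOVERNMENT))
```

The first check passes because level 2 projects may use internal reviewers; the second fails because level 3 initiatives require an external vendor.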

External assurance providers (vendors)

Given assurance requires a minimum degree of independence, consider whether the chosen vendor is planned to be engaged or contracted in another capacity for the initiative.

Note

The same external assurance provider (vendor) cannot be engaged to undertake gated assurance reviews and health checks if it has been involved in the same initiative in any other capacity.

Such activities may include involvement with program or project delivery (including the production or review of any management deliverables, for example, business case or specialist product) or acting as an assurance partner/undertaking integrated/continuous assurance on the initiative.
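A department could screen vendors against this rule before engaging them. The sketch below is a minimal Python illustration under stated assumptions: the `Engagement` record, the capacity labels and the function `eligible_for_independent_review` are all hypothetical, and a real check would rely on the department's own contract records.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Engagement:
    vendor: str
    initiative: str
    capacity: str  # for example "delivery", "business case", "assurance partner"


# Capacities that are themselves independent reviews and so do not conflict.
INDEPENDENT_REVIEW_CAPACITIES = {"gated assurance review", "health check"}


def eligible_for_independent_review(
    vendor: str, initiative: str, engagements: Iterable[Engagement]
) -> bool:
    """Return False if the vendor has served the initiative in any other capacity."""
    return not any(
        e.vendor == vendor
        and e.initiative == initiative
        and e.capacity not in INDEPENDENT_REVIEW_CAPACITIES
        for e in engagements
    )


# Hypothetical engagement history.
history = [
    Engagement("Vendor A", "Initiative X", "business case"),
    Engagement("Vendor B", "Initiative X", "health check"),
]
print(eligible_for_independent_review("Vendor A", "Initiative X", history))
print(eligible_for_independent_review("Vendor B", "Initiative X", history))
```

Here Vendor A is excluded from reviewing Initiative X because it produced a management deliverable, while Vendor B remains eligible because its only prior role was a health check.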

Program and project schedule alignment with key decision points

The baselined initiative schedule showing the critical path should inform when key decision points are required and dictate the timing for assurance activities. Gated assurance reviews must occur prior to the Accountable Officer’s decision to continue, discontinue, vary the scope for implementation or enter into a contract.

Tip

Timing is important. The review team should have enough current information to inform the review and recommendations, and the initiative requires sufficient time after the review to implement and fully resolve the most urgent recommendations prior to the next decision point. Key decision points can include commitment of initial funding, commitment of further funding, contract signing and the decision to go-live.

Individual gate reviews are timed to coincide with a specific point in the initiative lifecycle. As such, the areas of focus for each review change as the initiative progresses through its lifecycle.

Note

Gated reviews should not be combined for expediency (for example, Gate 2 and Gate 3 reviews), as each review has a specific focus based on the iterative development of the business case and the project stage.

For a program, the Gate 0 review should be timed to occur at major investment decision points, for example, at the end of a program tranche or just prior to the drawdown of capital and/or operational funding. A Gate 0 review may be combined with its component project gate reviews if they are planned to be undertaken at the same time. This allows the review team to undertake assurance at the program level (for example, a mid-stage Gate 0) and the project level (for example, Gate 4) concurrently, making better use of the review team’s and stakeholders’ time and avoiding duplicated review activity just prior to key decision points.

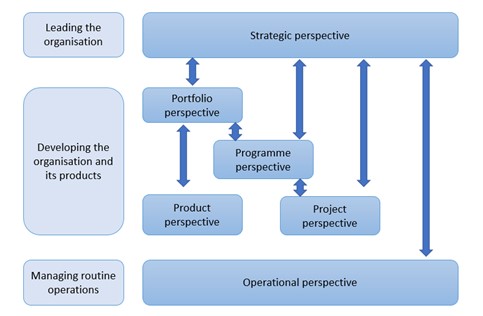

Applying risk management across assurance

Management of Risk (M_o_R®) is unique in explicitly considering the similarities and differences of applying risk management across the strategic, portfolio, program, project, product and operational perspectives. Depending on the complexity of the program or project, all of these perspectives, or a subset of them, may be relevant to assurance.

For example, an initiative may have specific risks regarding resourcing availability, technical risks regarding the chosen solution or testing plan, security, or data migration. There may be other risks surrounding procurement or vendor contracts, or risks regarding the identification and quantification of benefits or their realisation.

Note

In these circumstances an initiative can benefit from a specialist assurance review such as a ‘deep dive’. The need for these reviews should be considered as part of the assurance planning process.

Figure 1: Typical integration of risk information across the M_o_R® 4 perspectives (M_o_R® 4 Management of Risk: Creating and protecting value, AXELOS 2022). Arrows represent indicative risk information flows between perspectives. These will vary depending on which perspectives are relevant to the organisation.

Agile

The QGEA Portfolio, program and project management and assurance policy states “Departments must use project, program and portfolio management methodologies endorsed by the Queensland Government Customer and Digital Group (QGCDG), to direct, manage and report all digital and ICT-enabled programs and projects.”

QGCDG currently endorses the AXELOS Global Best Practice guidance, namely Managing Successful Programmes 5th edition, Managing Successful Projects with PRINCE2® 7th edition and PRINCE2 Agile®.

Note

PRINCE2 Agile® uses Scrum, Lean Start-up and Kanban and states that “PRINCE2 Agile® is not a substitute for PRINCE2®”.

As such, the minimum assurance requirements will still apply following the Queensland Treasury Gateway Review process where PRINCE2 Agile® is used.

Note

If the initiative is using PRINCE2 Agile®, at least one review team member should have practitioner certification in the guidance.

Gated assurance reviews

The assurance review is the third activity in the assurance process. This section focuses on gated assurance reviews; guidance regarding other initiative assurance activities is at Appendix B.

Gated assurance reviews examine programs and projects at key decision points in their lifecycle to provide assurance that they can progress to the next phase or stage. They provide independent guidance and recommendations to Accountable Officers and investing departments on how best to ensure that initiatives are successful.

Gated reviews are an independent peer review of the initiative’s viability and feasibility. Gated assurance reviews are not an audit; whilst they consider progress to date they are forward looking and provide recommendations based on findings on what is needed to help take the initiative forward. A gate review will not stop an initiative but informs Accountable Officers. It is a high-level review at a point in time.

The gated review process is a third line assurance activity and increases confidence in:

- alignment with Queensland Government and departmental strategic objectives

- investment decisions

- delivery of initiatives to time and budget with the realisation of benefits.

Triggers for gated reviews in projects

| Gated review | Trigger | Decision |

|---|---|---|

| Gate 1 - Preliminary evaluation | Occurs once the preliminary business case (or similar document) with multiple options to resolve a business problem is developed including a benefits map but before the project board approve the preliminary business case and give authority to proceed. | The Accountable Officer confirms the project meets a business need, is affordable, achievable with appropriate options explored and likely to achieve value for money. Key decision relates to consideration that the project can move out of the start up process |

| Gate 2 - Business case | Occurs once the full business case and the preferred way forward is fully analysed in terms of costs, risks and benefits to determine value for money including benefit profiles (benefits plan) but before a project board or similar group approval. | The Accountable Officer confirms project scope and viability in terms of robust costs, risks and benefits. Also determines if funding is available for the whole project, the potential for success, value for money to be achieved and the proposed delivery approach. |

| Gate 3 - Contract award | Occurs before placing a work order with a supplier or other delivery partner, or at preferred supplier stage before contract negotiations. The final business case is fully informed following the procurement activity and total project and ongoing costs are fully known before the project board decides to proceed. A Gate 3 review may occur before a pilot implementation or initial design contract is undertaken. A subsequent Gate 3 review may also be required to confirm the investment decision before full funding allocation and implementation. | The Accountable Officer confirms the project is still required, affordable and achievable. Decision needs to be made on whether to sign a vendor contract. |

| Gate 4 - Readiness for service | Occurs once all testing is complete and test summary reports are prepared but prior to business go-live and/or release into production. This review may need to be repeated if there are multiple go-lives planned, depending on the scale and functionality of each go-live. | Decision is to determine if the solution is ready for operational service. This is a key review and provides assurance that both the solution and the business are ready for implementation. |

| Gate 5 - Benefits realisation | Occurs 6 to 12 months after handover of the project outcome to the new business owner to determine if benefits are starting to be realised and to assess the set-up for ongoing solution maintenance. The timing of the first Gate 5 review will be confirmed at the Gate 4 review by the Accountable Officer to coincide with any decision points following project closure and in line with the benefits realisation plan. A Gate 5 review will also consider the operational resourcing needed, how the project outcomes are performing and any ongoing contract management. A Gate 5 review can be repeated several times over the life of the operational service. | Determines if the operational service is running effectively or if changes need to be made. If the project is part of a program it will inform if further work is required by the program to realise benefits. |

Review team size and qualifications

Appendix C provides details on the required review team size and qualifications.

Review timelines

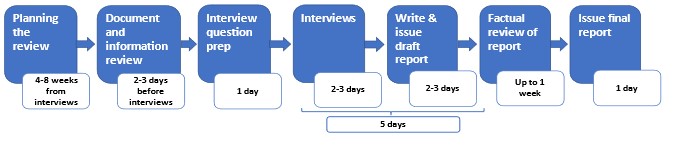

A gated assurance review involves four main activities:

- planning the review

- conducting the document and information review

- undertaking interviews

- preparing the draft and final report.

Further details on these activities and timeframe requirements are at Appendix C.

Requirements for the review report

Details on the requirements for the review report are at Appendix D.

3. Review action plan

This activity consists of developing the review action plan based on the review report recommendations including the timely implementation and reporting of progress to the Accountable Officer.

Drafting the action plan

Following receipt of the final report, the Accountable Officer distributes the report recommendations to the Project/Program Manager to develop an appropriate course of action. The action plan details how the prioritised recommendations will be addressed, by whom and by when, based on the target resolution timeframes.

Note

The expectation is that each of the recommendations will have an appropriate response approved by the Accountable Officer.

4. Implementation and reporting

The implementation and reporting of progress against the recommendations is the final activity in the assurance process. The value from undertaking assurance is realised when the assurance review recommendations are fully implemented and resolved. Progress against the action plan should be tracked and included in the initiative reporting.

Progress on assurance review recommendations is considered as part of the next gated assurance review or health check.

Portfolio assurance

A portfolio is the totality of the department’s investment in the changes required to achieve its strategic objectives (Management of Portfolios, AXELOS 2014). Business change can be delivered by a range of activities, including everything from a simple change in a business process step, through to complex initiatives. For the purposes of this framework though, a portfolio is taken to include the initiatives delivered as programs and projects.

Portfolios are different to initiatives in that they are perpetual. The portfolio composition is determined by investment decisions that enable the optimal mix of organisational change and business as usual, whilst working with available funding. Managing the portfolio includes the strategic decisions and processes to select the initiatives, control the portfolio and monitor progress.

Portfolio assurance is an optional assurance activity for departments.

Areas of focus for the review

An assurance review of a portfolio gives independent guidance to Accountable Officers, senior leaders and portfolio teams on how best to ensure the portfolio will enable the realisation of government policy, strategy and objectives and achieve value for money. While the process for completing assurance reviews for portfolios, programs and projects is similar, the content examined and the skills needed on the review team are different. A portfolio assurance review does not focus on the likelihood of successful delivery of the whole portfolio or any individual initiative within it. Rather, it is about the department’s ability to manage a portfolio of investments and focuses on providing a realistic view of the portfolio’s ability to:

- manage the portfolio’s strategic alignment with Queensland Government objectives and appropriate delivery in line with funding and people constraints

- monitor and respond to performance and risk (threats and opportunities) across the portfolio

- build the right culture and processes to manage the portfolio; and

- understand and respond to the capability and capacity requirements for the portfolio.

Review rating

A portfolio assurance review report will have four ratings as outlined below – one for each assurance topic and one for the portfolio overall. All four ratings use the same ratings scale and are a maturity assessment combined with an assessment of how the purpose of that practice area is being achieved.

| Rating | Description |

|---|---|

| Initial | The practices have not been fully implemented or do not fully achieve their purpose. |

| Performed | The practices are performed though are not fully managed or defined and are starting to achieve their purpose in some areas. |

| Managed | The practices are planned, monitored, and adjusted against a defined process and are achieving their purpose in most areas. |

| Optimised | The practices are optimised and continuously improved and achieve their purpose. |

Review specifics

A portfolio review follows the same process as that for initiative reviews and involves:

- engaging a review team

- agreeing a terms of reference and holding a planning meeting

- interviewing stakeholders and reviewing documentation

- completing a review report with four ratings (see further information above) and recommendations marked as critical, essential and recommended.

A portfolio review can be undertaken at any time. There can be benefit from repeating a portfolio review at two- or three-year intervals to understand any movements in maturity.

The independent review team should consist of three to four individuals with experience in portfolio management, organisational strategy development, planning and financial management with at least one review team member holding a Practitioner certification in AXELOS Management of Portfolios (MoP®).

Further guidance

Further guidance on undertaking portfolio assurance reviews can be found in the UK Government’s Infrastructure and Projects Authority Portfolios and Portfolio Management Assurance Workbook.

Assurance Workbook: Portfolios and Portfolio Management - GOV.UK (www.gov.uk)

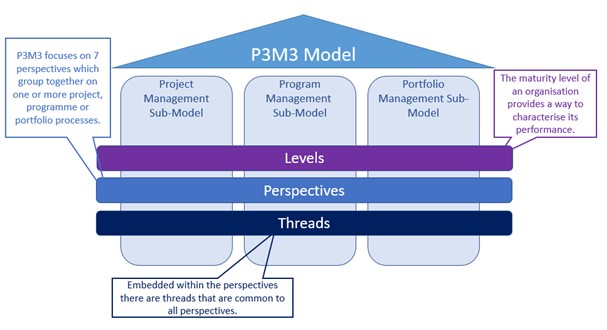

Portfolio, Program and Project Management Maturity Model (P3M3®) review

P3M3® is a framework for assessing and benchmarking your department’s current performance and developing plans for individual capability and organisational maturity improvement. The whole organisation, or specific areas of the organisation, can be assessed to determine the capability of the portfolio, program and project teams. P3M3® is unique in that it considers the whole system and not just the processes. It analyses the balance between the process, the competencies of the people who operate it, the tools that are deployed to support it and the management information used to manage delivery and improvements.

P3M3® comprises 3 maturity sub-models – portfolio (PfM3®), program (PgM3®) and project (PjM3®) – and you can choose to assess one, two or all three of these.

Figure 2: The P3M3® model

Areas of focus for the review

The P3M3® model can best be thought of as a three-dimensional cube built on perspectives, threads and levels.

There are 7 perspectives. The perspectives are the main process groups and they form the basis for reporting.

- Benefits management – ensures that the desired business change outcomes have been clearly defined, are measurable and are ultimately delivered.

- Finance management – ensures that the likely costs, both capital and operational, of delivering programs and projects are captured and evaluated within a formal business case and that costs are categorised and managed.

- Management control – covers how the direction of travel is maintained throughout the project, program or portfolio management lifecycle.

- Organisational governance – looks at how the delivery of projects, programs and portfolios is aligned to the strategic direction of the organisation.

- Resource management – covers management of all types of resources required for delivery.

- Risk management – the process to systematically identify and manage opportunities and threats.

- Stakeholder management – includes communications planning, the effective identification and use of different communications channels, and techniques.

The 13 threads describe common themes across the perspectives and help to identify process strengths and weaknesses. The 13 threads are:

- asset management

- assurance

- behaviours

- commercial commissioner

- commercial deliverer

- information and knowledge management

- infrastructure and tools

- model integration

- organisation

- planning

- process

- standards

- techniques.

Review rating

A P3M3® assessment will determine a maturity assessment for each of the three sub-models. A maturity level enables the identification of an improvement pathway – this will be a long-term strategic commitment to continuous improvement. The maturity levels can be briefly summarised as follows (AXELOS P3M3® Overview):

| Rating | Description |

|---|---|

| Level 1: Awareness of process | Processes are not usually documented. There are no, or few, process descriptions. Actual practice is determined by events or individual preferences and is subjective and variable. Level 1 organisations often over-commit, abandon processes in a crisis and are unable to repeat past successes consistently. There is little planning and executive buy-in, and process acceptance is limited. |

| Level 2: Repeatable process | Management may be taking the lead on a number of initiatives but there may be inconsistency in the levels of engagement and performance. Establishment of basic management practices can be demonstrated and processes are developing, however each program and project may be run with its own processes and procedures to a minimum standard. |

| Level 3: Defined process | Management and technical processes will be documented, standardised and integrated to some extent with other business processes. There is likely to be process ownership with responsibility for maintaining consistency and improvements across the department. Management are engaged consistently and provide active and informed support. There is a universally common approach in place and an established training and development program to develop skills and knowledge. |

| Level 4: Managed process | Behaviour and processes are quantitatively managed. The data collected contributes to the department’s overall performance measurement framework and is used to analyse the portfolio and understand capacity and capability constraints. Management will be committed, engaged and proactively seeking innovative ways to achieve goals. |

| Level 5: Optimised process | The department will focus on optimisation of its quantitatively managed processes to take into account predicted business needs and external factors. It anticipates future capacity demands and capability requirements to meet delivery challenges. The department will be a learning organisation, learning from past reviews and this will improve its ability to rapidly respond to changes and opportunities. There will be a robust performance management framework. The department will be able to demonstrate strong alignment of organisational objectives with business plans and this will cascade into scoping, sponsorship, commitment, planning, resource allocation, risk management and benefits realisation. |

Note

The Portfolio, program and project management and assurance policy requires departments to maintain an appropriate P3M3® level to provide a prioritised and balanced portfolio of change and ensure consistent delivery of initiatives across Queensland Government. It advises that the P3M3® Level 3: Defined process is an appropriate level to provide the department with centrally controlled processes and enables portfolios and individual initiatives to scale and adapt these processes to suit their circumstances. However, departments are encouraged to undertake a risk assessment and determine an appropriate target maturity level to achieve and maintain. A P3M3® Level 1: Awareness of process may be appropriate.

Review specifics

A certified assessment is undertaken by an AXELOS Consulting Partner. The AXELOS Consulting Partners can be located on the AXELOS website. Before undertaking a P3M3® assessment a few decisions will need to be made.

- Which P3M3® maturity model will be used – portfolio, program or project maturity? If the department is focused on improving project management, the PjM3® model will provide all of the necessary information.

- Scoping the assessment – projects can be delivered within different divisions of the department. Will the assessment be undertaken within one main project delivery area, or across the department?

Three Lines Model

This framework applies the principles of the Institute of Internal Auditors’ Three Lines Model to determine if the chosen reviewer has the appropriate level of independence for the type of assurance being undertaken. This model must be considered when determining who is able to undertake different assurance activities. For example, if internal audit or a vendor is engaged to undertake continuous assurance/integrated assurance/assurance partner activities they cannot be engaged to undertake the gated assurance reviews or health checks for the same initiative.
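The independence rule in the example above can be sketched as a simple check. This is an illustration only: the `Engagement` record, the activity labels and the `independence_conflicts` function are hypothetical names invented for this sketch and are not part of the framework or the Three Lines Model itself.

```python
from dataclasses import dataclass

# Hypothetical activity labels, chosen for this illustration only.
CONTINUOUS = {"continuous assurance", "integrated assurance", "assurance partner"}
POINT_IN_TIME = {"gated review", "health check"}


@dataclass(frozen=True)
class Engagement:
    initiative: str  # name of the project or program
    provider: str    # internal audit or a vendor
    activity: str    # one of the labels above


def independence_conflicts(engagements):
    """Return sorted (initiative, provider) pairs engaged for both kinds of activity."""
    continuous = {(e.initiative, e.provider) for e in engagements if e.activity in CONTINUOUS}
    point_in_time = {(e.initiative, e.provider) for e in engagements if e.activity in POINT_IN_TIME}
    # A provider appearing in both sets for the same initiative breaches the rule.
    return sorted(continuous & point_in_time)


engagements = [
    Engagement("Payroll upgrade", "Vendor A", "assurance partner"),
    Engagement("Payroll upgrade", "Vendor A", "health check"),
    Engagement("Payroll upgrade", "Vendor B", "gated review"),
]
print(independence_conflicts(engagements))
```

In this made-up data, Vendor A is flagged because it holds both the assurance partner role and a health check on the same initiative, while Vendor B is not.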

Other initiative assurance activities

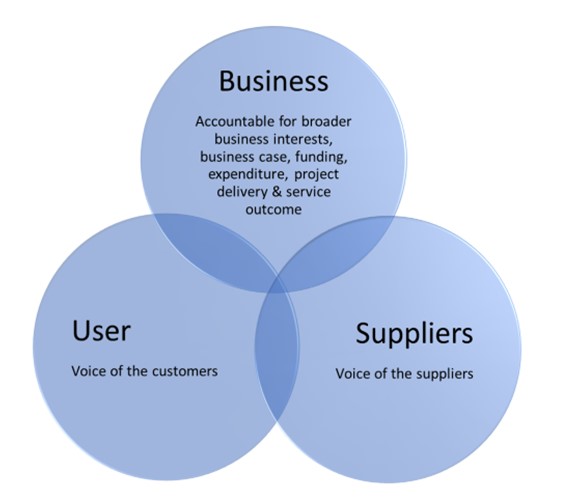

Project and program assurance

In line with Managing Successful Projects with PRINCE2® 7th edition and Managing Successful Programmes 5th edition guidance, the board is responsible, via its project/program assurance roles, for monitoring all aspects of the project’s or program’s performance and products independently of the project/program manager. Board members are responsible for the aspects of the assurance role aligned with their respective areas of concern (that is, business, user or supplier). They may delegate this assurance to others to conduct on their behalf; however, they remain accountable for the assurance activity aligned with their area of interest.

Project and program assurance is undertaken at the initiative level and is ongoing for the life of the initiative.

Figure 3: Areas of focus for project and program assurance

Further guidance on project assurance is provided within the PRINCE2® guidance; however, some key activities are included below.

Initiative assurance activities include ensuring:

- there is ongoing liaison between the business, user and supplier interests

- risks (both threats and opportunities) are controlled

- the right people, with the right skillsets are involved with the initiative

- quality methods and applicable standards are being followed

- internal and external communications are working.

Business assurance responsibilities include:

- assisting the project manager to develop the business case and benefits management approach (if it is developed by the project/program manager)

- reviewing the business case and ensuring it aligns with Queensland Government legislation and QGEA policy requirements throughout the lifecycle

- verifying the initiative continues to provide value for money and remains viable, achievable and desirable

- scrutinising and monitoring performance and progress against agreed objectives.

User assurance responsibilities include:

- advising on stakeholder engagement and the communication management approach

- ensuring that the user specification is accurate, complete and unambiguous

- assessing if the solution will meet the user’s needs and is progressing towards that target

- advising on the impact of potential changes from the user’s perspective.

Supplier assurance responsibilities include:

- reviewing the product descriptions

- advising on the quality management and change control approaches

- advising on the selection of the development strategy, design and methods

- advising on potential changes and their impact on the correctness, completeness and integrity of products against their product description from a supplier perspective.

Health checks

The general health check review is a short, point in time, objective assessment of how well the initiative is performing relative to its agreed investment objectives and QGEA and departmental policies, principles and standards. Health checks are similar and complementary to gated reviews, however gated reviews are undertaken prior to a key decision point in the initiative’s lifecycle.

General health check reviews provide independent guidance to Accountable Officers and investing departments by identifying a broad range of risks (threats and opportunities) and issues that may impact successful delivery.

Health checks can be undertaken for both projects and programs.

Areas of focus for the review

A general health check review is structured to assess the seven P3M3® perspectives namely:

- organisational governance

- management control

- benefits management

- risk management

- stakeholder management

- finance management

- resource management.

In addition to the P3M3® perspectives, a health check can also be tailored to assure specific aspects of the initiative that the Accountable Officer would like considered.

Triggers for health checks

For level 1 projects a health check is conducted at an early stage in the project’s lifecycle, just prior to the end of the initiation stage, to determine how the project is set up for success. A second health check is undertaken prior to full funding allocation and/or go-live/implementation.

For Level 2, 3 and 4 projects and programs the timing is considered with the planned gated assurance reviews. For example, a health check is undertaken if it is more than six months between gated assurance reviews, however you would avoid scheduling a health check within three months before the next planned gated assurance review.
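The timing guidance above can be sketched as a small date calculation. This is one possible reading, not a rule stated by the framework: it assumes a health check is needed only when the gap between gated assurance reviews is more than six calendar months, and that it should be held no later than three calendar months before the next planned review. The function names `add_months` and `latest_health_check_date` are hypothetical.

```python
import calendar
from datetime import date


def add_months(d, months):
    """Shift a date by whole calendar months, clamping the day to the month's length."""
    index = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(index, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def latest_health_check_date(previous_review, next_review):
    """Return the latest suggested health check date, or None if none is needed.

    Assumption: a gap of six months or less between gated reviews needs no
    health check; otherwise the health check should not fall within the three
    months before the next planned gated review.
    """
    if next_review <= add_months(previous_review, 6):
        return None
    return add_months(next_review, -3)


print(latest_health_check_date(date(2024, 1, 15), date(2024, 11, 15)))
print(latest_health_check_date(date(2024, 1, 15), date(2024, 6, 15)))
```

With a ten-month gap the sketch suggests holding the health check by mid-August; with a five-month gap it returns `None`.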

Health checks can also be undertaken at the discretion of the Accountable Officer and/or Board members representing business, user and supplier interests. Triggers for health checks include:

- when the Accountable Officer has specific area/s of concern that requires review

- where an initiative is reporting an overall amber or red status (on the ‘red, amber, green’ scale) for three consecutive months or more

- where a review team recommends a health check to be completed before the next key decision point

- if there is an overall negative delivery confidence assessment and there are a considerable number of critical and essential recommendations raised at the gated review. An action plan health check, undertaken by the same review team within three months of the previous review, would subsequently focus on ensuring recommendations have been fully resolved

- if insufficient progress is being demonstrated in resolving recommendations from a previous assurance activity

- where there is a major incident or event affecting the initiative environment, including a change of governance or accountability.
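The status-based trigger in the list above lends itself to a simple check. The sketch below is illustrative only and makes two assumptions: "amber or higher" is read as amber or red, and "three consecutive months" is read as the three most recent monthly reports. The function name `rag_trigger` and the lowercase status strings are hypothetical.

```python
def rag_trigger(monthly_status, months=3):
    """Return True if the most recent `months` reports are all amber or red.

    `monthly_status` is a list of overall statuses, oldest first, using the
    strings "green", "amber" and "red" (an assumed encoding).
    """
    recent = monthly_status[-months:]
    # Fewer than `months` reports cannot satisfy the trigger.
    return len(recent) == months and all(s in {"amber", "red"} for s in recent)


print(rag_trigger(["green", "amber", "red", "amber"]))
print(rag_trigger(["amber", "amber", "green"]))
```

A department might run a check like this against its monthly initiative reporting to prompt a conversation with the Accountable Officer; the decision to commission a health check remains a matter of judgement.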

Review team size and qualifications

Appendix D provides details on the required review team size and qualifications.

Review timelines

A health check involves four main activities:

- planning the review

- document and information review

- undertaking interviews

- preparing the draft and final report.

Further details on these activities and timeframe requirements are at Appendix D.

Requirements for the review report

Details on the requirements for the review report are at Appendix E.

Action plan

Following receipt of the final report, the Accountable Officer distributes the report recommendations to the Program/Project Manager to develop an action plan. The action plan details how the prioritised recommendations will be addressed, by whom and by when, based on the target resolution timeframes.

Note

The expectation is that each of the recommendations will have an appropriate response approved by the Accountable Officer. Progress on the health check recommendations is considered as part of the next gated assurance review or health check.

Deep dive reviews

Deep dive reviews are in-depth, objective, independent assurance reviews that focus on a particular portfolio, program or project delivery area of concern, such as organisational governance, management control, financial management, risk management, stakeholder management, resource management or benefits management. For example, the review may focus only on the business case, forecast benefits, governance or technical aspects such as the software architecture, testing plan or integration risks. These reviews are generally undertaken in response to issues being raised by key stakeholders to the initiative or at the direction of the Accountable Officer and/or investing department.

The review should be undertaken by a minimum of two reviewers, depending upon the scope of the review. An appropriate level of subject matter expertise is required for deep dive reviews. An external vendor may be engaged and the deliverables and timeframe for the review will be outlined in the contract deliverables.

Post implementation reviews

A post implementation review (PIR) is mandatory under this framework and evaluates whether the objectives in the original business case have been fully achieved. It reviews how a project was governed, managed and reported, and assesses whether the project achieved its stated scope, schedule, budget and benefits at the time of operational handover.

The review also considers aspects of the project that went particularly well and those that could have gone better. It considers the risks (threats and opportunities) and issues that could have been avoided had different actions been taken. A post implementation review provides clear actionable recommendations for future projects and is a key input into effective project management. Post implementation reviews provide the basis for building a reference class of data to help inform the accuracy of cost and time forecasting, organisational learning and continuous improvement in benefits management.

A post implementation review is conducted immediately prior to project closure. The review must be undertaken by reviewers who are independent of the project, in line with the minimum independence requirements outlined in this framework.

A post implementation review will be undertaken in a similar timeframe to a gated assurance review or health check. Information will be gathered from documentation and interviews and/or workshop sessions.

Recommendations are provided to the department’s project or portfolio management office for follow up and actioning.

Note

A copy of the post implementation review report must be provided to OAI.

Continuous assurance/integrated assurance/assurance partner services

The initiative may choose to engage the department’s internal audit or a vendor to undertake ongoing assurance for the initiative. These services can be referred to as:

- continuous assurance

- integrated assurance

- assurance partner model/service.

Many of the activities undertaken in these services include project/program assurance activities that are undertaken at the initiative level. For example:

| Areas of focus | Activities |

|---|---|

| Quality assurance of key program/project information and evidence | |

| Review and monitoring of the assurance plan | |

| Governance advisory and board attendance | |

| Risk workshops and related risk activities | |

| Gated review / health check preparation | |

Note

The internal auditor or vendor engaged to undertake these assurance activities must not be involved in the creation of any of the initiative deliverables (this would make them a supplier on the initiative and not an assurer), nor should they be involved in any of the day-to-day project management activities undertaken by the project/program manager.

As outlined under assurance planning, if internal audit or a vendor is engaged to undertake continuous assurance/integrated assurance/assurance partner activities they cannot be engaged to undertake the gated assurance reviews or health checks for the same initiative.

The initiative roles and responsibilities must clearly define the role of the assurance partner and the activities they will be undertaking. If the assurance partner attends board meetings their role must be included in the board terms of reference. The contract for this service must outline the deliverables for the work.

Review team size, qualifications and review timeframes

The review team for gated reviews and health checks must consist of a certified Gateway Review Team Leader and certified Gateway Review Team Members. They are selected for their skillsets and, as far as practical, matched to the gate requirements and the initiative’s need, scale and complexity (for example, benefits management specialists).

The number of review team members is influenced by the specialist skills required in relation to each decision point, particularly when reviewing level 3 and 4 initiatives. The table below provides a guide to the number of review team members required for each review.

All review team members must attend all review interviews.

| Assurance level | Review team size | Review team members required |

|---|---|---|

| Level 1 | Minimum two | Gateway team leader and member x 1 |

| Level 2 | Minimum two | Gateway team leader and member x 1 |

| Level 3 | Minimum three | Gateway team leader and member x 2 |

| Level 4 | Minimum four | Gateway team leader and member x 3 |
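The table above can be read as a lookup from assurance level to minimum team composition. The sketch below transcribes it for illustration; the names `MINIMUM_TEAM` and `meets_minimum_team` are hypothetical, and the check covers headcount only, not certification or skill match.

```python
# Minimum team composition per assurance level, transcribed from the table above.
# Each value is (Gateway team leaders, Gateway team members).
MINIMUM_TEAM = {1: (1, 1), 2: (1, 1), 3: (1, 2), 4: (1, 3)}


def meets_minimum_team(level, leaders, members):
    """Return True if the proposed team meets the minimum for the assurance level."""
    required_leaders, required_members = MINIMUM_TEAM[level]
    return leaders >= required_leaders and members >= required_members


print(meets_minimum_team(3, leaders=1, members=2))
print(meets_minimum_team(4, leaders=1, members=2))
```

Because the table states minimums, a larger team passes the check; specialist skills needed at a given decision point may justify additional members.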

Review timelines

A gated assurance review and health check involves four main activities:

- planning the review

- document and information review

- undertaking interviews, including at least one debrief with the Accountable Officer prior to issuing the draft report to ensure no surprises when the draft report is received

- preparing the draft and final report.

A gated assurance review or health check is undertaken as a snapshot at a point in time for the initiative. Timing is critical: because gated reviews are timed to inform decision making, they need to occur within the short window between the information becoming available to the review team and the decision being made.

Planning for an assurance review can commence 4 to 8 weeks before the scheduled review. The amount of time required will depend on the individual department and on how difficult it is to secure interview times and resources for the review.

Note

Assurance review conduct is important. Reviews are conducted with integrity, fairness and impartiality. The confidentiality of information provided in documentation and obtained in interviews must always be maintained.

Gated assurance review interviews and draft report writing should occur within a timeframe of five business days. Additional time before the review interviews to review project documentation and prepare for the interviews, and after issuing the draft report to finalise the report, is expected.

The review report

The gated assurance and health check review report provides a concise, evidence-based snapshot of an initiative at the time of the review. As a minimum a review report must:

- present clear findings against each of the areas of focus outlined in the relevant Queensland Treasury Gateway Review guide, for example, Gate 1 – Preliminary evaluation, or for a health check the P3M3® perspectives

- propose clear, actionable, forward-looking, helpful recommendations that will support continued investment decision making and realisation of expected benefits

- provide a clear one-to-one link between a finding and a recommendation so that the context for the recommendation is clearly understood

- rate each recommendation in terms of its urgency and criticality

- provide an overall delivery confidence assessment rating and a concise rationale for the chosen delivery confidence assessment

- provide commentary on the appropriateness of the assurance profile level

- provide details of any previous assurance reviews undertaken, their delivery confidence assessment and progress toward completion of recommendations

- check if there are any conditions resulting from the investment review process and progress made on these conditions

- include a list of all interviewees (names, positions and roles), as well as people who were requested for interview but were unable to be interviewed

- include a list of all documentation and information that was provided and reviewed as well as documentation requested but not provided

- specify if the report is draft or final

- specify the date of the review

- include the names of all review team members.

Recommendation priority ratings

Each recommendation should be rated as critical, essential or recommended to indicate its priority and provide guidance on the areas requiring the most urgent work.

| Recommendation priority rating | Description | Target resolution timeframe |

|---|---|---|

| Critical (do now) | To achieve success the recommendation should be actioned immediately. | Within 1 to 4 weeks |

| Essential (do by) | The recommendation is important but not urgent. Take action before further key decisions are taken. | Within 4 to 8 weeks |

| Recommended (good practice) | The initiative would benefit from the uptake of this recommendation. | Within 8 to 12 weeks |
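The target resolution timeframes in the table above can be turned into calendar dates for an action plan. The sketch below is illustrative only. It assumes the weeks are counted from the date of the final report, which the framework does not specify, and the names `TARGET_WEEKS` and `target_window` are hypothetical.

```python
from datetime import date, timedelta

# Target resolution timeframes in weeks (earliest, latest), from the table above.
TARGET_WEEKS = {
    "critical": (1, 4),
    "essential": (4, 8),
    "recommended": (8, 12),
}


def target_window(priority, report_date):
    """Return (earliest, latest) target resolution dates for a recommendation.

    Assumption: the timeframe starts on the date of the final report.
    """
    first_week, last_week = TARGET_WEEKS[priority]
    return (
        report_date + timedelta(weeks=first_week),
        report_date + timedelta(weeks=last_week),
    )


print(target_window("critical", date(2025, 3, 3)))
```

For a final report dated 3 March 2025, a critical recommendation would be targeted for resolution between 10 March and 31 March 2025 under these assumptions.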

Review report rating

Each gated review and health check report will include a delivery confidence assessment (DCA) rating for the initiative. The delivery confidence ratings are listed at Appendix E. A delivery confidence assessment summarises the level of confidence that the review team holds as to whether the initiative is likely to deliver its planned benefits and achieve its objectives. The DCA is used to inform decision making.

Note

It is important that the DCA definitions are understood; a red rating does not mean ‘bad’, rather it indicates urgent action is required by the Accountable Officer in order for the initiative to move forward.

The draft report is provided to the Accountable Officer for factual review for a period of up to five business days. The review team considers any factual feedback on the report and can correct factual or grammatical errors. The report recommendations and delivery confidence assessment are not negotiable. After one week and receipt of any factual feedback the review team issues the final report.

Note

The Accountable Officer does not ‘approve’ or ‘accept’ the report. At no point should review teams be requested to change their delivery confidence assessment, or downgrade findings, recommendations, or ratings on the recommendations.

The final report is delivered to the Accountable Officer and, for level 2, 3 and 4 initiatives, a copy must be provided to OAI within one week of completing the review.

Delivery confidence assessment

A five-point DCA rating scale is used for gated assurance and health check reviews:

| Assessment | Definition |

|---|---|

| Green | Successful delivery to time, cost and quality appears highly likely and there are no major outstanding issues that at this stage appear to threaten delivery significantly. |

| Green/amber | Successful delivery appears probable; however, constant attention is needed to ensure risks do not materialise into major issues threatening delivery. |

| Amber | Successful delivery appears feasible but significant issues exist requiring management attention. These appear resolvable at this stage and, if addressed promptly, should not impact delivery or benefits realisation. |

| Amber/red | Successful delivery is in doubt with major risks or issues apparent in a number of key areas. Prompt action is required to address these and establish whether resolution is feasible. |

| Red | Successful delivery appears to be unachievable. There are major issues which, at this stage, do not appear to be manageable or resolvable. The project/program may need re-baselining and/or overall viability re-assessed. |